regularization machine learning example

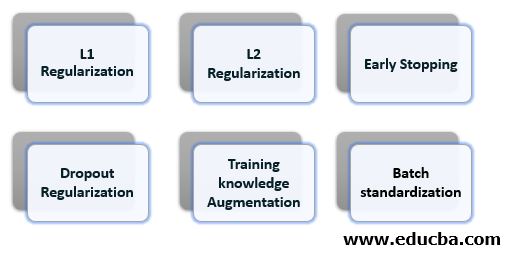

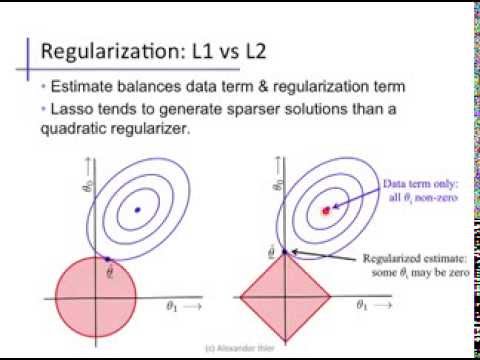

This technique discourages learning a more complex or flexible model. It adds an L1 penalty equal to the absolute value of the magnitude of the coefficients, which restricts the size of the coefficients.

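With design matrix $\Phi$, targets $y$, weights $w$, and regularization strength $\lambda$, the regularized objective is

$$J_D(w) = \frac{1}{2}(\Phi w - y)^T(\Phi w - y) + \frac{\lambda}{2} w^T w$$

This is also known as L2 regularization, or weight decay in neural networks. Re-grouping the terms gives a quadratic form in $w$.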

Regularization in Machine Learning. Dataset: house prices dataset. For example, consider a linear model with the following weights.

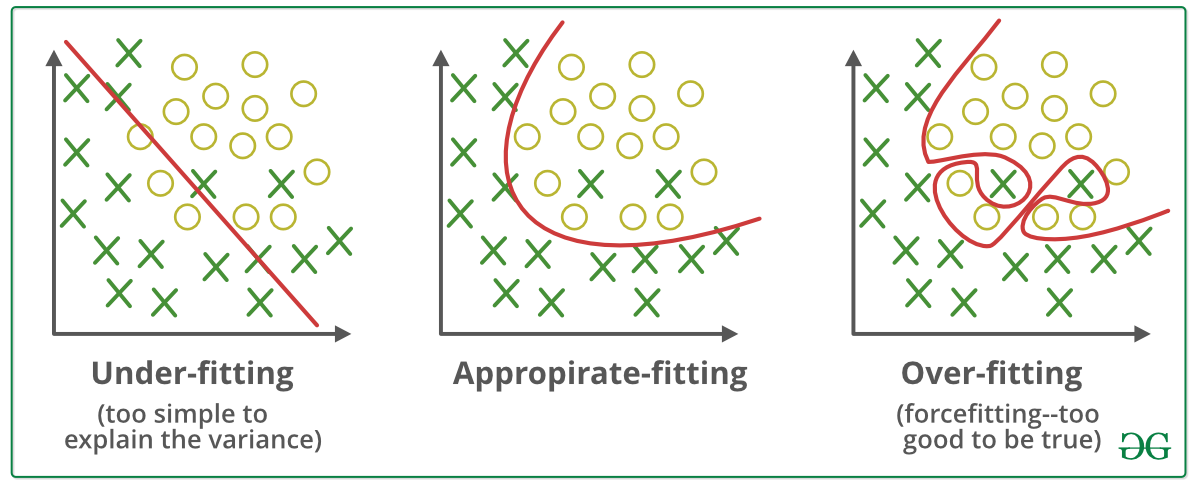

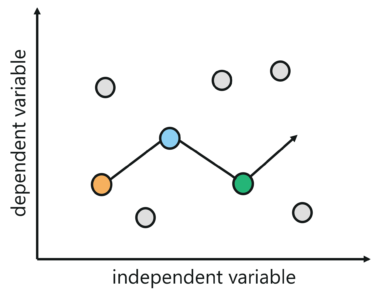

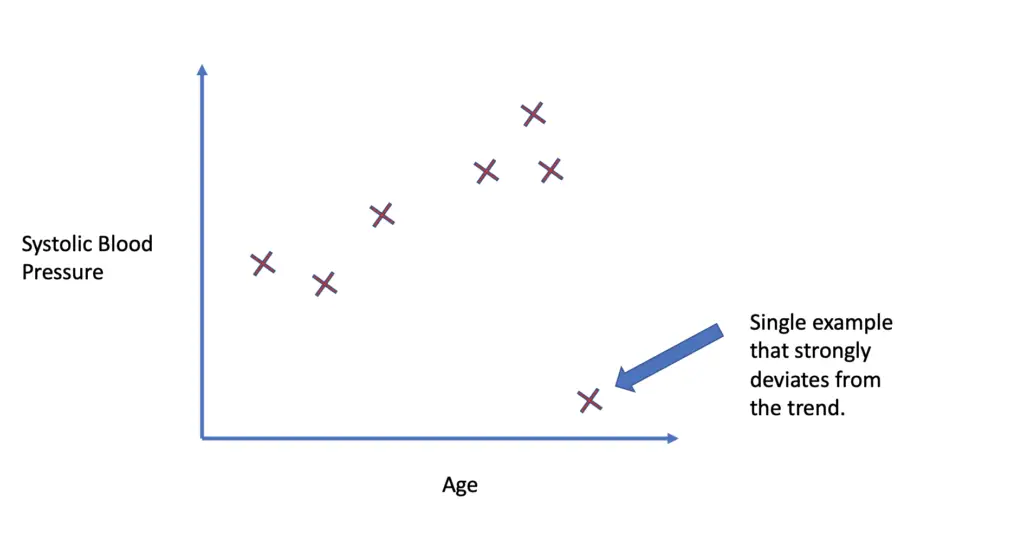

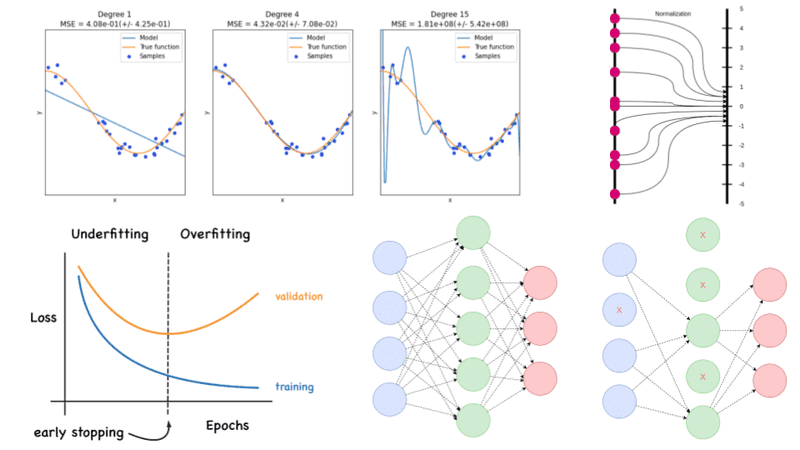

This problem might have a. Regularization is a technique used to reduce errors by fitting the function appropriately on the given training set, thereby avoiding overfitting. It means the model is not able to predict the output when.

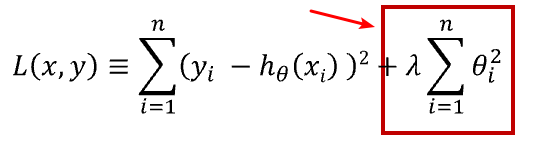

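This allows the model to not overfit the data and follows Occam's razor. $\ell_2$ loss with $\ell_2$ regularization:

$$\min_\theta \hat{L}_R(\theta) = \frac{1}{n}\sum_{i=1}^{n}\left(f_\theta(x_i) - y_i\right)^2 + \lambda\lVert\theta\rVert_2^2$$

This corresponds to a normal likelihood $p(x, y \mid \theta)$ and a normal prior $p(\theta)$. Regularized cost function and gradient descent.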
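As a rough illustration of gradient descent on an L2-regularized squared loss, the sketch below assumes a linear model $f_\theta(x) = \theta^T x$ and synthetic data. The function names, learning rate, and step count are illustrative choices, not taken from the original text.

```python
import numpy as np

def regularized_loss(theta, X, y, lam):
    """Mean squared error plus lam times the squared L2 norm of theta."""
    residual = X @ theta - y
    return np.mean(residual ** 2) + lam * np.sum(theta ** 2)

def gradient_descent(X, y, lam, lr=0.1, steps=2000):
    """Minimize the regularized loss with plain gradient descent."""
    n = X.shape[0]
    theta = np.zeros(X.shape[1])
    for _ in range(steps):
        # Gradient of the data term plus gradient of the penalty term.
        grad = (2.0 / n) * (X.T @ (X @ theta - y)) + 2.0 * lam * theta
        theta -= lr * grad
    return theta

# Synthetic data (an assumption for illustration only).
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))
y = X @ np.array([2.0, -1.0, 0.5]) + 0.1 * rng.normal(size=100)

theta_plain = gradient_descent(X, y, lam=0.0)
theta_reg = gradient_descent(X, y, lam=1.0)
print("no penalty:  ", theta_plain)
print("with penalty:", theta_reg)
```

With the penalty switched on, the fitted weights are pulled towards zero compared with the unpenalized fit.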

Concept of regularization. This penalty controls the model complexity: larger penalties yield simpler models. The model is usually trained on the training dataset and tested on the test dataset.

Regularization is one of the most important concepts of machine learning. Gradient descent. Overfitting is a phenomenon that occurs when a machine learning model is constrained to the training set and is not able to perform well on unseen data. Here's why regularization is an important step in machine learning.

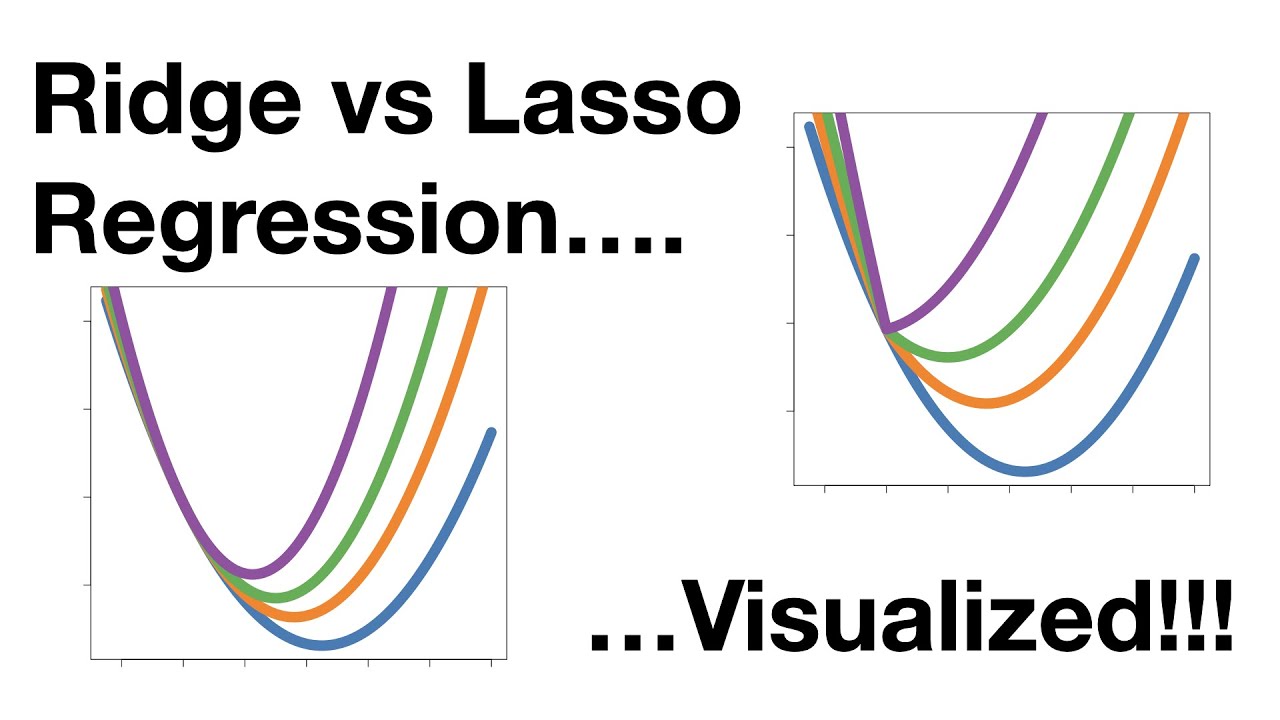

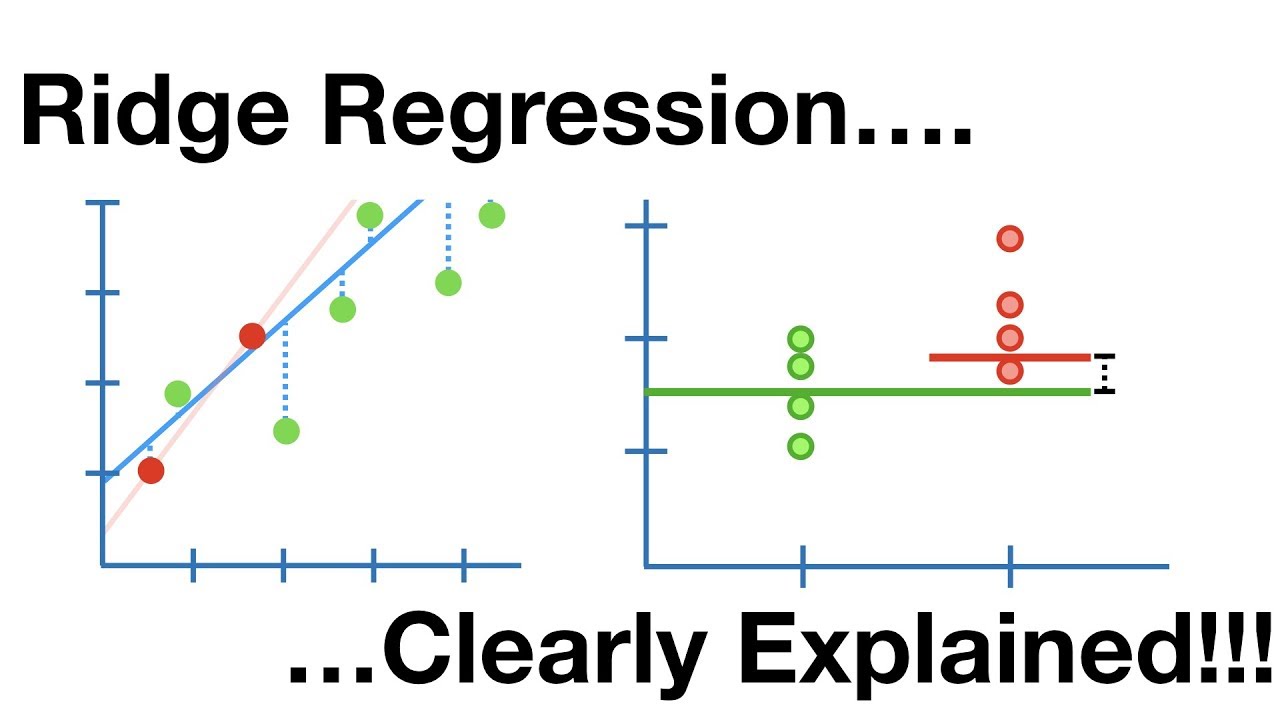

This is a form of regression that constrains, regularizes, or shrinks the coefficient estimates towards zero. For example, Lasso regression implements this method. In machine learning, regularization problems impose an additional penalty on the cost function.

The simple model is. This video on Regularization in Machine Learning will help us understand the techniques used to reduce the errors while training the model. A linear model with weights $w_1 = 0.2$, $w_2 = 0.5$, $w_3 = 5$, $w_4 = 1$, $w_5 = 0.25$, $w_6 = 0.75$ has an L2 regularization term of 26.915.
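The L2 term quoted above is the sum of the squared weights. A minimal sketch that reproduces the arithmetic (the helper names `l2_term` and `l1_term` are illustrative):

```python
# Weights from the example above.
weights = [0.2, 0.5, 5.0, 1.0, 0.25, 0.75]

def l2_term(ws):
    """Sum of squared weights (the L2 term before scaling by lambda)."""
    return sum(w * w for w in ws)

def l1_term(ws):
    """Sum of absolute weights (the L1 term before scaling by lambda)."""
    return sum(abs(w) for w in ws)

print(round(l2_term(weights), 3))  # approximately 26.915
print(round(l1_term(weights), 3))  # approximately 7.7
```

The single large weight $w_3 = 5$ contributes 25 of the total, so the squared penalty is dominated by the largest weights.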

Regularization is essential in machine and deep learning. Consider this very simple example. It is not a complicated technique and it simplifies the machine learning process.

Another extreme example is the test sentence "Alex met Steve", where "met" appears several times in. It is a technique to prevent the model from overfitting by adding extra information to it.

$$z = b_0 + b_1 x_1 + b_2 x_2 + b_3 x_3, \qquad Y = \frac{1.0}{1.0 + e^{-z}}$$

Here $b_0$, $b_1$, $b_2$, and $b_3$ are weights, which are just numeric values that must be determined.

Let us take the example of housing prices using Linear Regression. You will learn by. A simple relation for linear regression looks like this.

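It adds an L2 penalty which is equal to the square of the magnitude of the coefficients. Regularization is a form of regression that regularizes or shrinks the coefficient estimates towards zero. With design matrix $\Phi$, targets $y$, and regularization strength $\lambda$:

$$J_D(w) = \frac{1}{2}\left(w^T(\Phi^T\Phi + \lambda I)w - w^T\Phi^T y - y^T\Phi w + y^T y\right)$$

The optimal solution is obtained by solving $\nabla_w J_D(w) = 0$:

$$w = (\Phi^T\Phi + \lambda I)^{-1}\Phi^T y$$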
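The closed-form solution can be checked numerically. The sketch below uses NumPy on synthetic data; the function names and the data are assumptions made for illustration. It solves the linear system rather than forming the inverse explicitly, which is the usual numerically safer choice.

```python
import numpy as np

def ridge_closed_form(Phi, y, lam):
    """Solve (Phi^T Phi + lam * I) w = Phi^T y for w."""
    n_features = Phi.shape[1]
    A = Phi.T @ Phi + lam * np.eye(n_features)
    return np.linalg.solve(A, Phi.T @ y)

def ridge_gradient(Phi, y, w, lam):
    """Gradient of 1/2 ||Phi w - y||^2 + lam/2 ||w||^2 with respect to w."""
    return Phi.T @ (Phi @ w - y) + lam * w

# Synthetic data (illustrative only).
rng = np.random.default_rng(0)
Phi = rng.normal(size=(50, 3))
y = Phi @ np.array([1.0, -2.0, 0.5]) + 0.1 * rng.normal(size=50)

w_small = ridge_closed_form(Phi, y, lam=0.01)
w_large = ridge_closed_form(Phi, y, lam=100.0)
print("lambda=0.01:", w_small)
print("lambda=100: ", w_large)
```

The gradient vanishes at the returned solution, and the larger value of $\lambda$ produces a weight vector with a smaller norm.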

Using cross-validation to determine the regularization coefficient. Setting up a machine-learning model is not just about feeding the data. You can refer to this playlist on YouTube for any queries regarding the math behind the concepts in Machine Learning.
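One common way to choose the regularization coefficient by cross-validation is to score each candidate value on held-out folds and keep the best one. The sketch below assumes scikit-learn, a synthetic dataset, and an arbitrary grid of candidate values; none of these come from the original text.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import cross_val_score

# Synthetic regression data (illustrative only).
X, y = make_regression(n_samples=200, n_features=10, noise=10.0, random_state=0)

candidates = [0.01, 0.1, 1.0, 10.0, 100.0]  # hypothetical grid of alpha values
mean_scores = {}
for alpha in candidates:
    # 5-fold cross-validation; scores are negative mean squared errors.
    scores = cross_val_score(
        Ridge(alpha=alpha), X, y, cv=5, scoring="neg_mean_squared_error"
    )
    mean_scores[alpha] = scores.mean()

# Higher (less negative) score means lower validation error.
best_alpha = max(mean_scores, key=mean_scores.get)
print("best alpha:", best_alpha)
```

scikit-learn also provides `RidgeCV` and `LassoCV`, which wrap this search in a single estimator.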

1- If the slope is 1, then for each unit change in x there will be a unit change in y. In other words, this technique discourages learning a more complex or flexible model, so as to avoid the risk of overfitting. When you are training your model through machine learning with the help of artificial neural networks, you will encounter numerous problems.

For more discussion on the bias-variance trade-off and linear regression, you can select one or more of the books I discuss in my blog post titled "The Best Books For Machine Learning for Both Beginners and Experts". Here a is the slope of this line (see the figures below). This article aims to implement L2 and L1 regularization for linear regression using the Ridge and Lasso modules of the Sklearn library of Python.
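A minimal sketch of the Ridge and Lasso estimators from scikit-learn follows. It uses a synthetic dataset in place of the house prices data, and the `alpha` values are arbitrary choices for illustration.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso, LinearRegression, Ridge

# Synthetic data: only 3 of the 10 features actually influence the target.
X, y = make_regression(
    n_samples=200, n_features=10, n_informative=3, noise=5.0, random_state=0
)

ols = LinearRegression().fit(X, y)
ridge = Ridge(alpha=10.0).fit(X, y)   # L2 penalty
lasso = Lasso(alpha=5.0).fit(X, y)    # L1 penalty

print("OLS coefficient norm:  ", np.linalg.norm(ols.coef_))
print("Ridge coefficient norm:", np.linalg.norm(ridge.coef_))
print("Lasso zero coefficients:", int(np.sum(lasso.coef_ == 0)))
```

Ridge shrinks all coefficients but rarely sets any exactly to zero, while Lasso can drive some coefficients exactly to zero and so acts as a form of feature selection.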

When you train a machine learning. Regularization for linear models: a squared penalty on the weights would make the math work nicely in our case. Before we explore the concept of regularization in detail, let's discuss what the terms learning and memorizing mean from the perspective of machine learning.

Regularization as Bayesian prior. Example. In words, you compute a value z that is the sum of input values times b-weights, add a b0 constant, then pass the z value to the equation that uses the math constant e. L2 regularization or Ridge Regression.
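The computation described in words above (a weighted sum plus a constant, passed through the logistic function) can be sketched as follows. The function name and the numeric values are hypothetical, chosen only so the arithmetic is easy to follow.

```python
import math

def predict_probability(inputs, weights, bias):
    """Compute z = b0 + sum(b_i * x_i), then Y = 1 / (1 + e^(-z))."""
    z = bias + sum(b * x for b, x in zip(weights, inputs))
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical weights b1..b3, constant b0, and inputs x1..x3.
b0 = -1.0
b = [0.5, 0.25, -0.5]
x = [2.0, 4.0, 2.0]

# z = -1.0 + 1.0 + 1.0 - 1.0 = 0.0, so Y = 1 / (1 + 1) = 0.5
print(predict_probability(x, b, b0))
```

Training determines the b-weights. Regularization adds a penalty on their size to the training objective so that no single weight grows excessively large.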

For example, Ridge regression and SVM implement this method. To learn more about applying regularization to linear and non-linear models, go to the online courses page for Machine Learning. Regularization for Machine Learning.

Let us consider that we are developing a machine learning model. In the context of machine learning, the term regularization refers to a set of techniques that help the machine to learn rather than just memorize. L1 regularization or Lasso Regression.

Importing the required libraries. L2 and L1 regularization. Sometimes the machine learning model performs well with the training data but does not perform well with the test data.

L2 Vs L1 Regularization In Machine Learning Ridge And Lasso Regularization

Regularization Machine Learning Know Type Of Regularization Technique

Regularization In Machine Learning Regularization In Java Edureka

Regularization A Fix To Overfitting In Machine Learning

Linear Regression 6 Regularization Youtube

Introduction To Regularization Methods In Deep Learning By John Kaller Unpackai Medium

Difference Between L1 And L2 Regularization Implementation And Visualization In Tensorflow Lipman S Artificial Intelligence Directory

Regularization In Machine Learning Programmathically

What Is Regularization In Machine Learning Quora

Understand L2 Regularization In Deep Learning A Beginner Guide Deep Learning Tutorial

Regularization Techniques For Training Deep Neural Networks Ai Summer

A Simple Explanation Of Regularization In Machine Learning Nintyzeros

Which Number Of Regularization Parameter Lambda To Select Intro To Machine Learning 2018 Deep Learning Course Forums

Regularization Part 1 Ridge L2 Regression Youtube

Machine Learning Regularization And Regression Youtube

Coursera S Machine Learning Notes Week3 Overfitting And Regularization Partii By Amber Medium